Vivid Visualization | Reinforcement Learning

Introduction

Advancements in deep reinforcement learning and the need for large amounts of training data have led to the emergence of virtual-to-real learning. Computer vision communities have shown keen interest in it. 3D engines like Unreal have been used to generate photo-realistic images that can be used for training deep neural networks.

The underlying idea behind using visualizations for reinforcement learning is analogous to a video game. In a game, the player is rewarded for making right choices and penalized for making wrong choices (or moves in a virtually rendered environment); the rewards and penalties are based largely on the player’s interaction with the virtual landscape and the non-playable characters.

The 3D virtual environments, thus created, have found application in different learning tasks, such as:

- Autonomous driving

- Collision avoidance

- Image segmentation

Virtual Environment for Visual Deep Learning (VIVID)[1]

Many open-source environments are readily available for use, but they either provide relatively smaller landscapes or have limited entity-environment interactions. To better facilitate learning via visual recognition, VIVID (Virtual Environment for Visual Deep Learning) is utilized because it can render larger scale, diversified indoor and outdoor landscapes.

Another advantage that VIVID provides is that it can leverage the human skeletal system to simulate different complex human movements and actions. Potential applications of VIVID include:

- Indoor navigation

- Action recognition

- Event detection

Architecture

The VIVID system has the Unreal 3D Engine at its core. Unreal is a proprietary rendering engine by Epic games, first showcased in 1998. It has since been used widely in various high-profile video game titles like Gears of War and PUBG. It’s also been used in filmmaking to create virtual sets. [2]

Hardware simulations are powered by AirSim. [3] This is an open-source simulator for cars, drones, etc. that is based on the fourth generation of Unreal Engine.

PLAYER CONTROL: This governs the main character – the entity that is rewarded or penalized for different actions and interactions.

Three Character classes are provided in VIVID:

- ROBOT: The Unreal Mannequin model.

- DRONE: A simple flying entity. (AirSim simulation is used for more realistic interactions.)

- CAR: AirSim simulation models.

NPC CONTROL: Non-Playable Characters that form the other entities with whom the player interacts.

- Blueprint (a visual script function, native to Unreal) is used to program complex NPC behavior.

- Complex human actions are animated by the Unreal animation editor.

- Unreal also provides an extra NPC API to spawn NPCs at defined coordinates and perform predefined actions via RPC calls.

SCENES: The landscape/environment. It may be indoors or outdoors, e.g. city, parking garage, etc.

- The Unreal Engine offers a large community of designers and a large choice of landscapes.

- Special events can be pre-defined (e.g. forest fire rescue, gun shooting).

DEEP LEARNING API : This allows the VIVID system to communicate with a variety of deep learning libraries.

- Microsoft RPC (Remote Procedural Call) by AirSim is used to communicate with different programming languages via TCP/IP.

- This offers a choice of different deep learning libraries that can be incorporated as long as the base language is supported by RPC.

- It also provides the option to setup distributed learning.

Applications

VIVID finds applications in deep reinforcement learning, action recognition, object recognition, semantic recognition, and video event recognition:

- Indoor navigation: Robot and drone navigation simulations.

- Event recognition: Real-life events where identification is critical but there is fewer training data available, e.g. a gunman on a road or a school shooting.

- Action recognition: An advantage of using VIVID is that it can recognize 3D human actions owing to the virtual environment. In contrast, real-world videos can only show 2-dimensional imagery. VIVID facilitates action recognition by creating a range of free action models on MIXAMO.

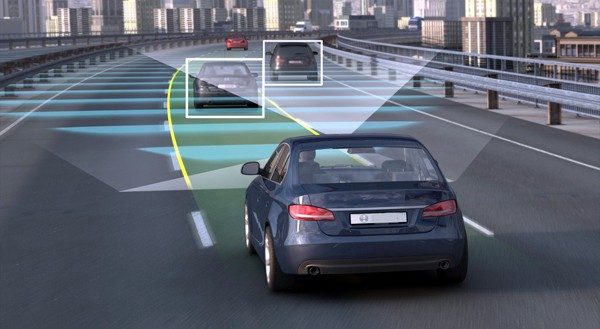

- Autonomous driving: It also supports the training of autonomous driving algorithms.

Figure 6: Training of autonomous driving algorithms

Sources

- VIVID: Virtual Environment for Visual Deep Learning – Kuan-Ting Lai , Chia-Chih Lin , Chun-Yao Kang , Mei-Enn Liao , Ming-Syan Chen National Taipei University of Technology, National Taiwan University Taipei City, Taiwan.

- Wikipedia: Unreal Engine Video Games

- Wikipedia: AirSim

Authored by Nishant Sangwan, Analyst at Absolutdata