Statistictionary | ML Drift Analysis Through MLOps

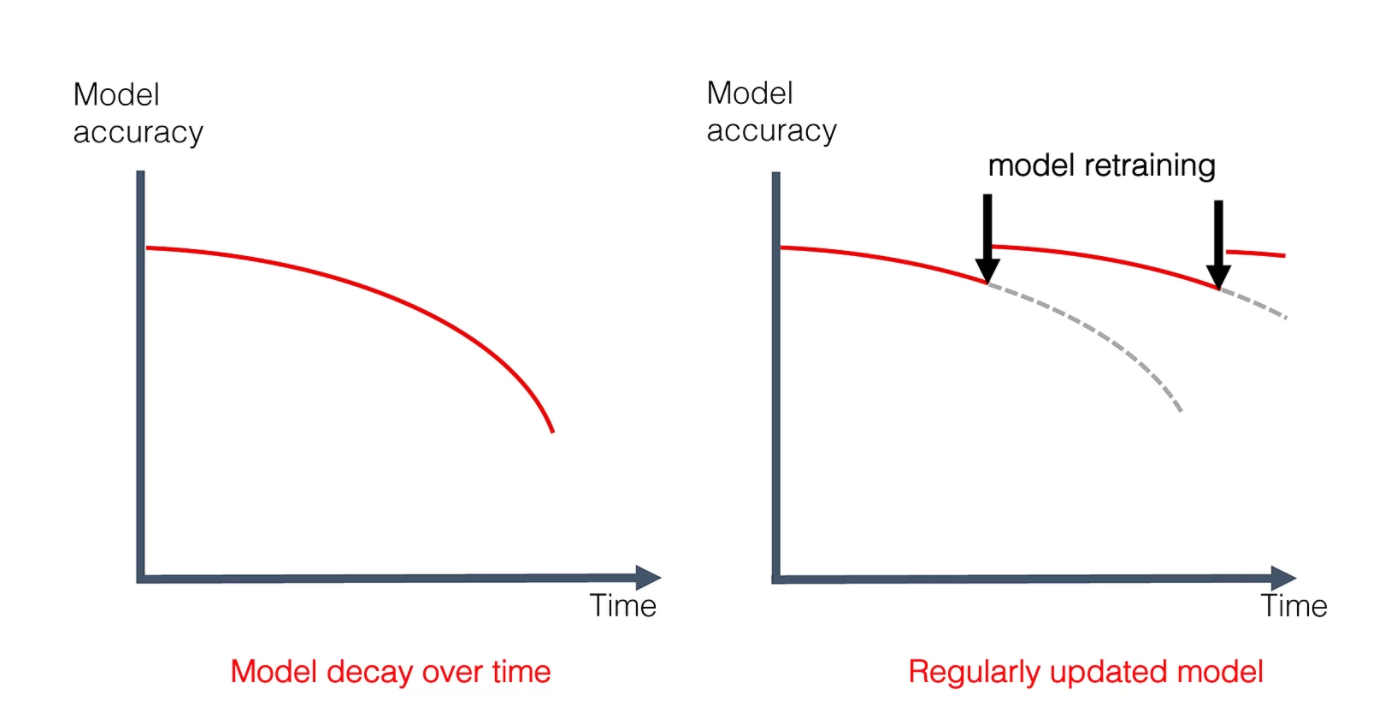

Drift is the deterioration of a model’s performance as a result of changes in real-world factors. A model is only as good as the data it was trained on, but the world is dynamic and data is constantly changing. The result is a gradual degradation of the model’s predictive power relative to its performance during the training period, which means the model may need to be retrained.

Source: Machine Learning Monitoring, Part 5: Why You Should Care About Data and Concept Drift

Types of Drift

Model drift can be further classified into:

- Concept Drift

Concept drift occurs when there is a change in the relationship between input variables and the target variable. For example, the annual seasonal weather shift prompts consumers to buy warm coats in colder months, but the demand decreases once temperatures rise in spring and then increases again in the winter.

- Prediction Drift

Prediction drift is a significant change in the distribution of the model’s predictions (labels or values), indicating a change in the underlying data. Ground-truth labels are not required to detect prediction drift.

Detecting Drift

Drift detection can be done using either of the following two techniques:

1. Statistical measures: This approach relies on statistical metrics, which are comparatively easy to implement, interpret, and validate, and which are widely used across industries.

Let’s take a look at some of the statistical measures that can be used to detect drift:

a. The Kolmogorov-Smirnov (KS) test compares two samples based on their empirical cumulative distribution functions. In this case, the two samples to be compared are the training data and the post-training data.

In the context of drift, the KS test has a null hypothesis that both datasets are drawn from the same distribution. If this hypothesis is rejected at the chosen significance level, there is evidence that the data distribution has shifted and that the model may have drifted.
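To make the test concrete, here is a minimal sketch of the two-sample KS statistic using only the Python standard library. The function names, the synthetic Gaussian samples, and the 0.05 significance level are assumptions made for illustration. The rejection rule uses the common large-sample critical value rather than an exact p-value. In practice a library routine such as `scipy.stats.ks_2samp` would normally be used instead.

```python
import bisect
import math
import random


def ks_statistic(sample_a, sample_b):
    """Largest gap between the two empirical CDFs (two-sample KS statistic)."""
    a, b = sorted(sample_a), sorted(sample_b)
    d = 0.0
    # The empirical CDFs only change at observed values, so checking those is enough.
    for x in a + b:
        cdf_a = bisect.bisect_right(a, x) / len(a)
        cdf_b = bisect.bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


def ks_rejects_null(sample_a, sample_b, alpha=0.05):
    """Large-sample rule: reject when D > c(alpha) * sqrt((n + m) / (n * m))."""
    n, m = len(sample_a), len(sample_b)
    c_alpha = math.sqrt(-0.5 * math.log(alpha / 2))
    critical = c_alpha * math.sqrt((n + m) / (n * m))
    d = ks_statistic(sample_a, sample_b)
    return d > critical, d, critical


# Synthetic data (assumption): one feature, standard normal at training time.
rng = random.Random(0)
training = [rng.gauss(0.0, 1.0) for _ in range(1000)]
recent_same = [rng.gauss(0.0, 1.0) for _ in range(1000)]
recent_shifted = [rng.gauss(0.5, 1.0) for _ in range(1000)]

_, d_same, critical = ks_rejects_null(training, recent_same)
shift_rejected, d_shifted, _ = ks_rejects_null(training, recent_shifted)
print(f"same distribution: D={d_same:.3f} (critical value {critical:.3f})")
print(f"shifted mean:      D={d_shifted:.3f}, null rejected: {shift_rejected}")
```

The test is applied per feature. With many features, some rejections are expected by chance, so the significance level usually needs adjusting.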

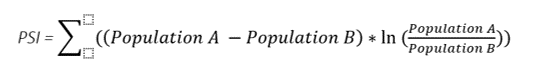

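b. The Population Stability Index (PSI) assesses population changes over time. It detects model drift by comparing the share of observations that falls into each bin of a variable in the baseline (expected) population with the share in the current (actual) population, according to the following formula:

$$\mathrm{PSI} = \sum_{i} (A_i - E_i) \ln\frac{A_i}{E_i}$$

Here A_i and E_i are the actual and expected proportions of observations in bin i. The result is commonly interpreted as follows: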

- If PSI is less than 0.1, there’s no substantial change to the population and the existing model is still relevant.

- If PSI is greater than 0.1 but less than 0.2, there’s been some change and the model should be adjusted accordingly.

- If PSI is greater than 0.2, there has been a major population shift and the model should be retrained.

Source: Population Stability Index
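The thresholds above can be tried out with a short sketch. It assumes the standard PSI definition: over a set of bins, sum (actual share - expected share) × ln(actual share / expected share). The ten equal-width bins, the small `eps` floor for empty bins, the function names, and the synthetic data are all illustrative choices, not something prescribed by the source.

```python
import math
import random


def population_stability_index(expected, actual, n_bins=10, eps=1e-6):
    """PSI over equal-width bins spanning the expected (baseline) sample's range.

    Assumes the baseline sample is not constant (otherwise the bin width is zero).
    """
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / n_bins

    def bin_shares(values):
        counts = [0] * n_bins
        for v in values:
            idx = int((v - lo) / width)
            # Clamp so values outside the baseline range land in the edge bins.
            counts[min(max(idx, 0), n_bins - 1)] += 1
        # eps avoids log(0) and division by zero when a bin is empty.
        return [max(c / len(values), eps) for c in counts]

    e_shares = bin_shares(expected)
    a_shares = bin_shares(actual)
    return sum((a - e) * math.log(a / e) for a, e in zip(a_shares, e_shares))


def interpret_psi(psi):
    """Map a PSI value to the rule-of-thumb bands listed above."""
    if psi < 0.1:
        return "no substantial change"
    if psi < 0.2:
        return "some change"
    return "major shift"


# Synthetic data (assumption): a single numeric feature.
rng = random.Random(1)
baseline = [rng.gauss(0.0, 1.0) for _ in range(5000)]
current_same = [rng.gauss(0.0, 1.0) for _ in range(5000)]
current_shifted = [rng.gauss(1.0, 1.0) for _ in range(5000)]

psi_same = population_stability_index(baseline, current_same)
psi_shifted = population_stability_index(baseline, current_shifted)
print(f"same population:    PSI={psi_same:.4f} -> {interpret_psi(psi_same)}")
print(f"shifted population: PSI={psi_shifted:.4f} -> {interpret_psi(psi_shifted)}")
```

The binning scheme affects the result. Quantile-based bins derived from the baseline are a common alternative to the equal-width bins used here.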

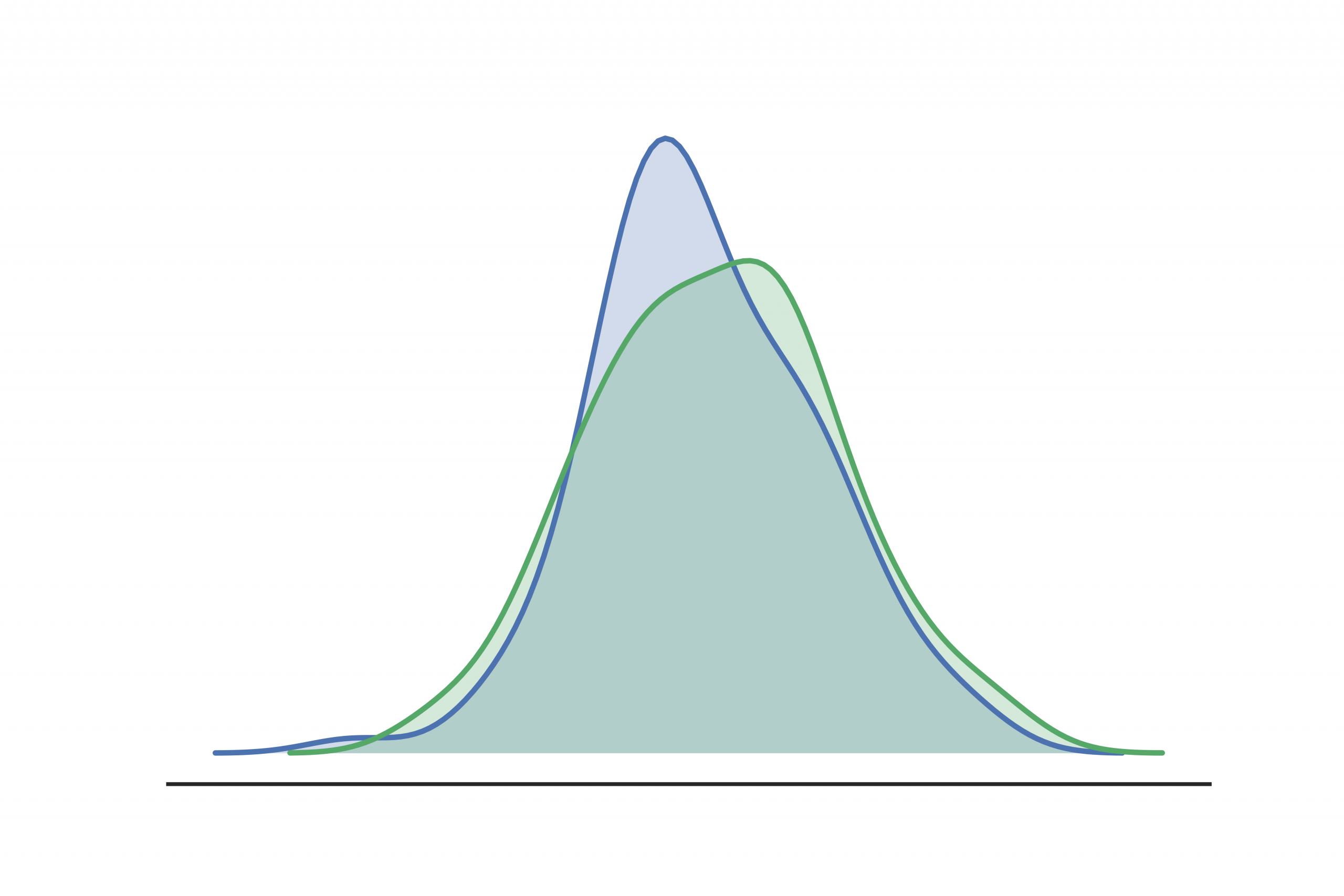

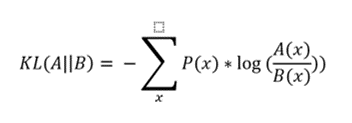

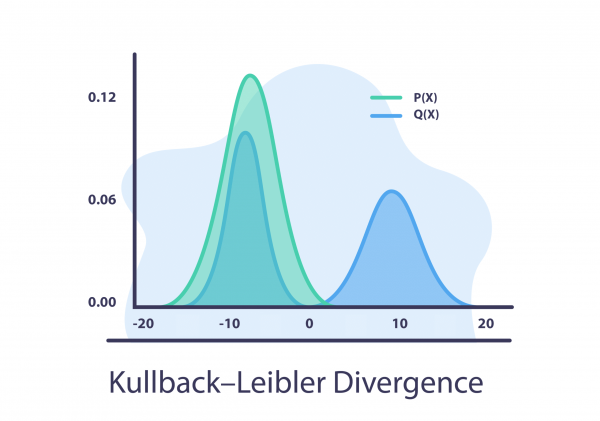

c. The Kullback–Leibler (KL) divergence, also known as relative entropy, measures how one probability distribution differs from another. It is not symmetric, so it is not a true distance metric.

If P is the distribution of the old data and Q is the distribution of the new data, then:

$$D_{KL}(P \,\|\, Q) = \sum_{x} P(x) \log\frac{P(x)}{Q(x)}$$

In the above formula, || denotes the divergence of P from Q.

- If P(x) is high and Q(x) is low, the divergence between the data distributions is high.

- If P(x) is low and Q(x) is high, the divergence is more moderate but still significant.

- If P(x) and Q(x) are somewhat alike, the divergence between the distributions is low.

Source: 8 Concept Drift Detection Methods
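As a small worked example of the asymmetry described in the bullets above, the sketch below computes the KL divergence for two discrete distributions in both directions. It assumes the standard definition, the sum over x of P(x) · log(P(x) / Q(x)), with natural logarithms. The function name and the two example distributions are made up for illustration.

```python
import math


def kl_divergence(p, q):
    """D_KL(P || Q) in nats for two discrete distributions over the same outcomes."""
    if len(p) != len(q):
        raise ValueError("p and q must cover the same outcomes")
    total = 0.0
    for px, qx in zip(p, q):
        if px == 0:
            continue  # a term with P(x) = 0 contributes nothing
        if qx == 0:
            return math.inf  # P puts mass where Q has none
        total += px * math.log(px / qx)
    return total


# Hypothetical class shares: old data is skewed, new data is balanced.
old_dist = [0.9, 0.1]
new_dist = [0.5, 0.5]

forward = kl_divergence(old_dist, new_dist)
backward = kl_divergence(new_dist, old_dist)
print(f"D_KL(old || new) = {forward:.4f}")
print(f"D_KL(new || old) = {backward:.4f}")
```

The two directions give different values, which is why the choice of reference distribution (old data or new data) should be fixed before comparing divergence values over time.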

2. Measuring the Accuracy of the Model

This approach entails training a classification model to distinguish data points from the two sets (for example, training data versus recent data). If the classifier can barely tell the sets apart, there’s little drift. But if it separates them accurately, then drift is probable.
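One way to sketch this idea: label old rows 0 and new rows 1, train a classifier on part of the combined data, and check its accuracy on the held-out part. Accuracy near 0.5 suggests the sets are hard to tell apart. The nearest-centroid classifier below is a deliberately simple stand-in chosen to keep the example dependency-free; it only picks up shifts in feature means, and a real setup would likely use a stronger model. All names and the synthetic data are assumptions.

```python
import random


def centroid(rows):
    """Per-feature mean of a list of equal-length feature vectors."""
    return [sum(col) / len(rows) for col in zip(*rows)]


def sq_dist(u, v):
    return sum((a - b) ** 2 for a, b in zip(u, v))


def domain_classifier_accuracy(old_rows, new_rows, test_fraction=0.3, seed=0):
    """Hold-out accuracy of a nearest-centroid classifier separating old from new."""
    labelled = [(r, 0) for r in old_rows] + [(r, 1) for r in new_rows]
    random.Random(seed).shuffle(labelled)
    n_test = int(len(labelled) * test_fraction)
    test, train = labelled[:n_test], labelled[n_test:]

    c_old = centroid([r for r, y in train if y == 0])
    c_new = centroid([r for r, y in train if y == 1])

    correct = 0
    for row, label in test:
        predicted = 1 if sq_dist(row, c_new) < sq_dist(row, c_old) else 0
        correct += predicted == label
    return correct / n_test


# Synthetic data (assumption): two features; the shifted set moves the first one.
rng = random.Random(42)
old = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(1000)]
new_same = [[rng.gauss(0, 1), rng.gauss(0, 1)] for _ in range(1000)]
new_shifted = [[rng.gauss(3, 1), rng.gauss(0, 1)] for _ in range(1000)]

acc_same = domain_classifier_accuracy(old, new_same)
acc_shifted = domain_classifier_accuracy(old, new_shifted)
print(f"no drift:   accuracy={acc_same:.2f}")
print(f"with drift: accuracy={acc_shifted:.2f}")
```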

MLOps and ML Drift

Because of drift, a model’s performance decays gradually over time. This can be addressed by periodically retraining the model on a fresh batch of data, but retraining at short intervals is difficult to manage manually.

MLOps simplifies this process by automating model retraining. This automatic retraining can be run as a scheduled process using pre-existing data pipelines.

The optimal retraining frequency depends on several external factors. Monitoring the model at regular intervals is the most reliable way to detect drift and to decide how often to retrain.
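A scheduled monitoring step of this kind might look roughly like the sketch below. Everything in it is hypothetical: `run_monitoring_cycle`, `MonitoringResult`, the `mean_gap` score, and the threshold are placeholders. In a real pipeline the drift score would be one of the measures discussed earlier, and `retrain` would hand off to the existing training pipeline.

```python
from dataclasses import dataclass
from typing import Callable, Sequence


@dataclass
class MonitoringResult:
    drift_score: float
    retrained: bool


def run_monitoring_cycle(
    baseline: Sequence[float],
    current: Sequence[float],
    drift_score: Callable[[Sequence[float], Sequence[float]], float],
    retrain: Callable[[Sequence[float]], None],
    threshold: float,
) -> MonitoringResult:
    """One scheduled check: score drift and retrain only if it exceeds the threshold."""
    score = drift_score(baseline, current)
    if score > threshold:
        retrain(current)
        return MonitoringResult(score, True)
    return MonitoringResult(score, False)


def mean_gap(baseline, current):
    """Placeholder drift score: absolute difference of the sample means."""
    return abs(sum(current) / len(current) - sum(baseline) / len(baseline))


# Stand-in for a real retraining job: just record which batch triggered it.
retrained_on = []

stable = run_monitoring_cycle(
    [1.0, 2.0, 3.0], [1.1, 2.0, 2.9], mean_gap, retrained_on.append, threshold=0.5
)
drifted = run_monitoring_cycle(
    [1.0, 2.0, 3.0], [4.0, 5.0, 6.0], mean_gap, retrained_on.append, threshold=0.5
)
print(stable, drifted, retrained_on)
```

Passing the score and the retraining step in as functions keeps the scheduling logic independent of any particular drift measure or training framework.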

References

Aporia.com: Concept Drift in Machine Learning 101

Aporia.com: Why Monitor Prediction Drifts?

Towards Data Science: How to Detect Model Drift in MLOps Monitoring

Arize.com: Model Drift: Guide to Understanding Drift in AI

Authored by: Abhishek Bisht, Analyst at Absolutdata