Experience Extended | Interpreting Machine Learning Models Using Shapley Values

Introduction

Some machine learning models (such as neural networks, gradient boosting, and other ensemble methods) are called “black-box models” because their predictions are hard to explain. Although these models often achieve high accuracy, simpler models such as linear and logistic regression are sometimes preferred, because their coefficients, p-values, etc. make it clear how each feature affects the prediction.

In such cases, Shapley values can be extremely useful. The Shapley value is a solution concept from cooperative game theory that distributes the total gains (or costs) of a coalition fairly among all of its players. Shapley values are also used in other domains, such as attribution modeling in web analytics, economic models, and fair division problems. In machine learning, the features play the role of the players: by comparing the model's outputs for different sets of features, Shapley values attribute to each feature its share of the difference between a prediction and the average prediction, which explains that feature's importance.

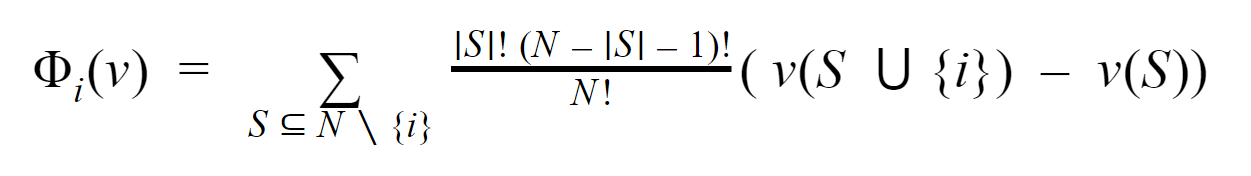

How Shapley Values Are Calculated

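The Shapley value of a feature is its weighted average marginal contribution to the model's output, taken over all the coalitions (subsets) of other features it could join. Formally, let N be a set of n players (here, the features) and let $v: 2^N \to \mathbb{R}$ be a function that maps every subset of players to a real number, with $v(\varnothing) = 0$, where $\varnothing$ denotes the empty set. The Shapley value of player $i$ is

$$\phi_i(v) = \sum_{S \subseteq N \setminus \{i\}} \frac{|S|!\,(n - |S| - 1)!}{n!}\,\bigl(v(S \cup \{i\}) - v(S)\bigr)$$

where:

- $S$ is a coalition of features that does not contain $i$.

- $v(S)$ is the worth of coalition $S$: the total expected sum of payoffs its members can obtain by cooperating.

- $v(S \cup \{i\}) - v(S)$ is the marginal contribution of feature $i$ to coalition $S$.

- $i$ is the feature whose Shapley value is being computed.

The weight $|S|!\,(n - |S| - 1)!/n!$ is the fraction of the $n!$ possible orderings of the features in which exactly the features of $S$ come before $i$, so $\phi_i$ is equivalently the average of $i$'s marginal contribution over all orderings.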
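The formula can be evaluated directly by enumerating every coalition. The sketch below is a minimal brute-force implementation, assuming the worth function v is available as a Python callable on frozensets of player names. The three-player game is hypothetical, chosen so the answer is easy to check by hand: every player adds 10, and players a and b share a bonus of 6 when both are present.

```python
from itertools import combinations
from math import factorial


def shapley_values(players, v):
    """Exact Shapley values by enumerating every coalition S that excludes i.

    players: list of hashable player (feature) names
    v: callable mapping a frozenset of players to that coalition's worth
    """
    n = len(players)
    phi = {}
    for i in players:
        others = [p for p in players if p != i]
        total = 0.0
        for size in range(n):  # |S| ranges from 0 to n - 1
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                S = frozenset(subset)
                total += weight * (v(S | {i}) - v(S))  # marginal contribution of i to S
        phi[i] = total
    return phi


# Hypothetical game: 10 per member, plus a bonus of 6 when "a" and "b" cooperate.
def toy_game(S):
    worth = 10 * len(S)
    if {"a", "b"} <= S:
        worth += 6
    return worth


print(shapley_values(["a", "b", "c"], toy_game))
```

Up to floating-point rounding, a and b each receive 13 (their own 10 plus half of the shared bonus) and c receives 10, and the three values sum to v(N) = 36. Because the loop visits 2^(n-1) coalitions per feature, this brute-force approach is only practical for a handful of features.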

The Tree SHAP Method for Explaining Decision Tree Models

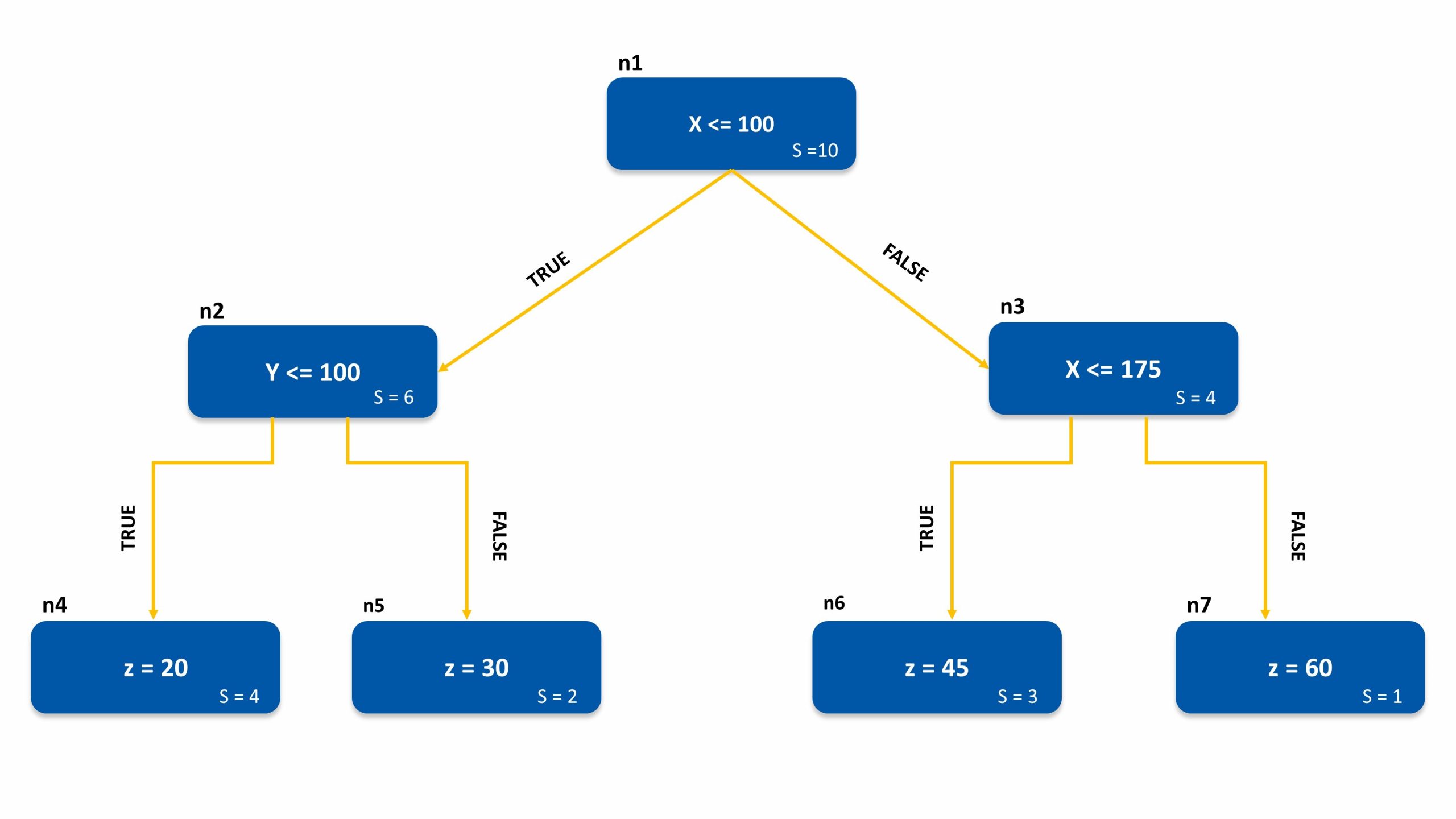

Consider the decision tree above, built from a total of ten random samples:

- X and Y are the independent variables.

- z is the output variable.

- nk denotes node k (n1 is the root).

- S is the number of training samples that reach the respective node.

To calculate feature importance with Shapley values, each order (permutation) in which the independent variables X and Y can be added to the model is considered separately, and each variable's marginal contribution is then averaged over these orders.

Let’s compute the SHAP values for the selected instance i with X = 130 and Y = 65. The model's output for this instance is 45.

The prediction of the null model (no features known) is the average over all ten samples: ϕ0 = (20*4 + 30*2 + 45*3 + 60*1)/10 = 33.5
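As a quick check of this arithmetic, the null-model prediction is the mean of the leaf predictions weighted by the number of training samples in each leaf. The leaf values and counts below are read off the formula for ϕ0.

```python
# (leaf prediction, number of training samples in that leaf), from the example:
# 20 (4 samples), 30 (2), 45 (3), 60 (1)
leaves = [(20, 4), (30, 2), (45, 3), (60, 1)]

total_samples = sum(count for _, count in leaves)
phi_0 = sum(value * count for value, count in leaves) / total_samples
print(phi_0)  # 33.5
```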

Consider the sequence X > Y (X is added first, then Y)

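- X is added to the null model first. Knowing only X = 130, the instance follows the splits on X from the root down to leaf node n6, whose prediction is 45, so the marginal contribution of X is ϕx1 = 45 - 33.5 = 11.5.

- Now Y is added to the model. The instance's path never passes through a split on Y, so adding Y does not alter the prediction; hence the marginal contribution of Y is ϕy1 = 45 - 45 = 0.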

Consider the sequence Y > X (Y is added first, then X)

- Y is added to the null model first. The root node n1 splits on X, which is still unknown, so the prediction is the sample-weighted average of its two children: (6/10) * (prediction from child node n2) + (4/10) * (prediction from child node n3).

- From node n2: n2 splits on Y, which is now known, so the instance follows its Y = 65 branch and the prediction is 20.

- From node n3: n3 splits on X, which is still unknown, so its prediction is again a weighted average of its children: (3/4) * 45 + (1/4) * 60 = 48.75.

- The total prediction with just Y is (6/10) * 20 + (4/10) * 48.75 = 31.5, and the marginal contribution of Y is ϕy2 = 31.5 - 33.5 = -2.

- Now X is also added to the model, which gives a prediction of 45; the marginal contribution of X is ϕx2 = 45 - 31.5 = 13.5.
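The rule used in these steps (follow a split when its feature is known, otherwise average both children weighted by their sample counts) can be written as a short recursive function. This is a minimal sketch of that rule, not the SHAP package's implementation. The tree's shape, sample counts, and leaf values follow the example, but the split thresholds (100 and 150 on X, 80 on Y) are hypothetical because the text does not give them; they are chosen only so that the instance X = 130, Y = 65 follows the same paths as in the example.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    samples: int                       # training samples reaching this node
    value: Optional[float] = None      # prediction (leaf nodes only)
    feature: Optional[str] = None      # split feature (internal nodes only)
    threshold: Optional[float] = None  # go left if x[feature] < threshold
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def expected_prediction(node, x, known):
    """Prediction for instance x when only the features in `known` are available.

    Splits on a known feature are followed as usual; splits on an unknown
    feature average both children, weighted by their sample counts.
    """
    if node.value is not None:  # leaf node
        return node.value
    if node.feature in known:
        child = node.left if x[node.feature] < node.threshold else node.right
        return expected_prediction(child, x, known)
    return (node.left.samples * expected_prediction(node.left, x, known)
            + node.right.samples * expected_prediction(node.right, x, known)) / node.samples


# Hypothetical thresholds; shape, sample counts and leaf values follow the example.
tree = Node(samples=10, feature="X", threshold=100,
            left=Node(samples=6, feature="Y", threshold=80,        # n2
                      left=Node(samples=4, value=20),
                      right=Node(samples=2, value=30)),
            right=Node(samples=4, feature="X", threshold=150,      # n3
                       left=Node(samples=3, value=45),             # n6
                       right=Node(samples=1, value=60)))

x = {"X": 130, "Y": 65}
for known in [set(), {"X"}, {"Y"}, {"X", "Y"}]:
    print(sorted(known), expected_prediction(tree, x, known))
```

Up to floating-point rounding, this returns 33.5, 45, 31.5 and 45 for the coalitions ∅, {X}, {Y} and {X, Y}, matching the hand calculations. Enumerating coalitions this way is exponential in the number of features; the Tree SHAP algorithm is designed to avoid that enumeration.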

Final SHAP Values:

ϕx = (ϕx1 + ϕx2)/2 = (11.5 + 13.5)/2 = 12.5

ϕy = (ϕy1 + ϕy2)/2 = (0 - 2)/2 = -1
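The averaging over the two orderings can be replayed in a few lines of Python. This sketch takes the four coalition values computed by hand above as given (v(∅) = 33.5, v({X}) = 45, v({Y}) = 31.5, v({X, Y}) = 45) and averages each feature's marginal contribution over every ordering of the features.

```python
from itertools import permutations

# Coalition values v(S) from the worked example
v = {
    frozenset(): 33.5,
    frozenset({"X"}): 45.0,
    frozenset({"Y"}): 31.5,
    frozenset({"X", "Y"}): 45.0,
}

features = ["X", "Y"]
orders = list(permutations(features))  # ("X", "Y") and ("Y", "X")
phi = {f: 0.0 for f in features}

for order in orders:
    coalition = frozenset()
    for f in order:
        # Marginal contribution of f given the features added before it
        phi[f] += v[coalition | {f}] - v[coalition]
        coalition = coalition | {f}

phi = {f: total / len(orders) for f, total in phi.items()}
print(phi)                                 # {'X': 12.5, 'Y': -1.0}
print(v[frozenset()] + sum(phi.values()))  # 45.0
```

The last line confirms that ϕ0 + ϕx + ϕy recovers the model's prediction of 45 for this instance.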

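The prediction for instance i is therefore explained exactly by ϕ0 + ϕx + ϕy = 33.5 + 12.5 - 1 = 45, which equals the model's output: the SHAP values split the gap between the null-model prediction and the actual prediction between the two features.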

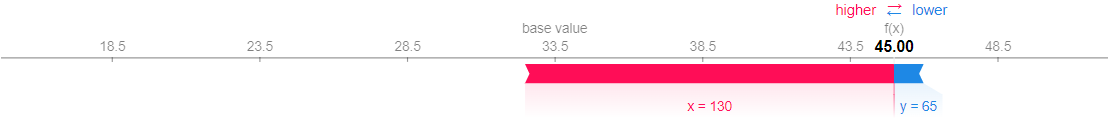

Force plot of the SHAP values for instance i in the decision tree above.

Here, red indicates that X increased the prediction (ϕx = +12.5) and blue indicates that Y decreased it (ϕy = -1), relative to the base value ϕ0 = 33.5.

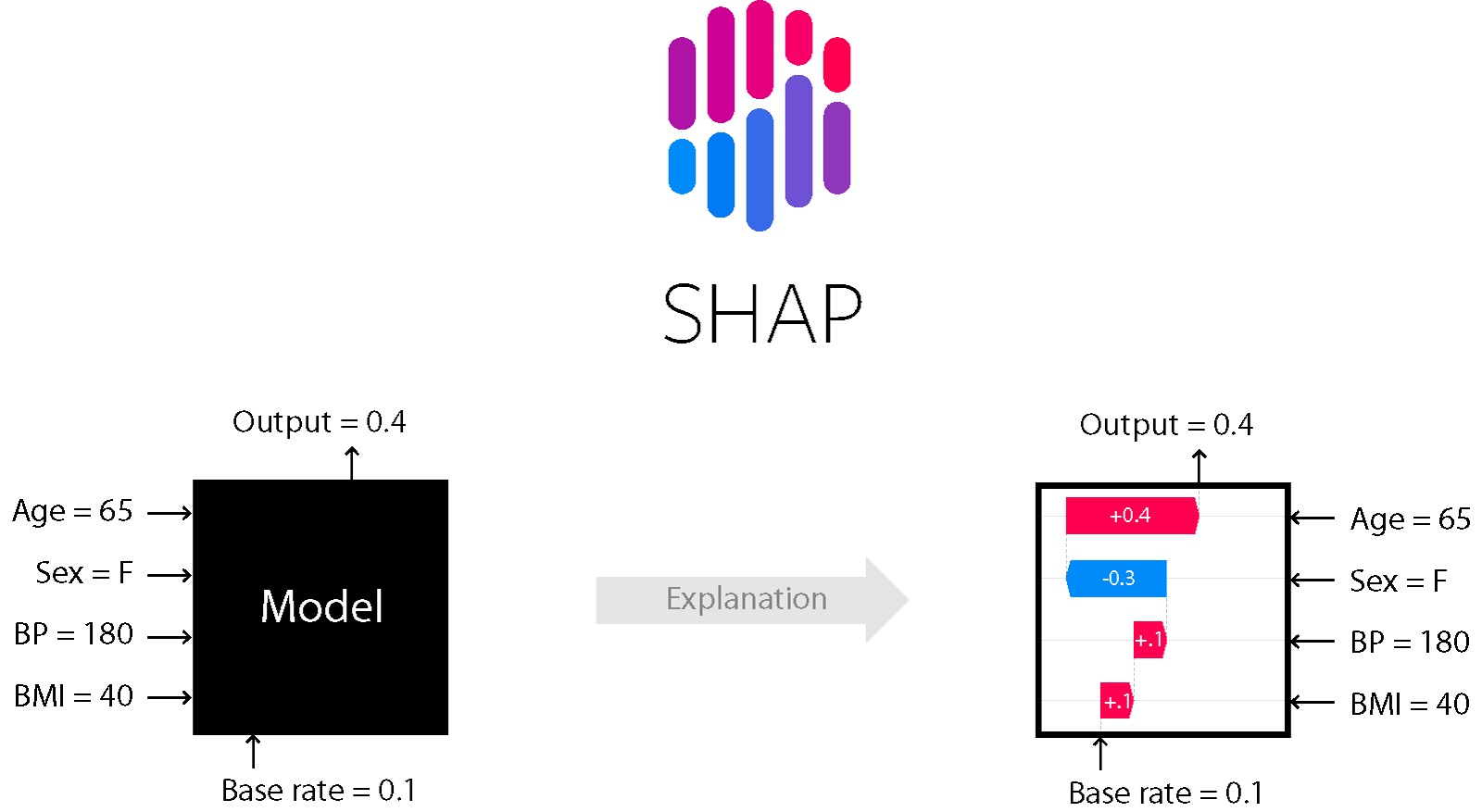

Summarizing the SHAP method as a tool

Conclusion

- Shapley values are the only attribution method that satisfies efficiency, symmetry, dummy, and additivity.

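- The same process can be applied to other machine learning algorithms, such as deep neural networks, random forests, and other ensemble methods. For tree ensembles, Tree SHAP computes exact values efficiently; for other models, exact computation grows exponentially with the number of features, so the SHAP package relies on approximations such as KernelExplainer and DeepExplainer.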

- The Python package SHAP offers an array of tools, including force plots like the one above, to better visualize and understand black-box models.

- Thus, Shapley values provide explainability and interpretability to ML algorithms.
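Applying the same process to models with many features runs into cost: exact enumeration needs 2^(n-1) coalitions per feature. One simple, model-agnostic workaround, sketched here, is to average each feature's marginal contribution over randomly sampled orderings instead of all of them. This is plain Monte Carlo permutation sampling, not the SHAP package's own explainers. It assumes the coalition worth v can be evaluated for any subset; the three-player game is a hypothetical stand-in for a model (every player adds 10, and a and b share a bonus of 6, so the exact values are 13, 13 and 10).

```python
import random


def sampled_shapley(players, v, num_samples=5000, seed=0):
    """Approximate Shapley values by averaging marginal contributions
    over randomly sampled orderings of the players (Monte Carlo)."""
    rng = random.Random(seed)
    phi = {p: 0.0 for p in players}
    for _ in range(num_samples):
        order = list(players)
        rng.shuffle(order)
        coalition = frozenset()
        prev = v(coalition)
        for p in order:
            coalition = coalition | {p}
            current = v(coalition)
            phi[p] += current - prev  # marginal contribution of p in this ordering
            prev = current
    return {p: total / num_samples for p, total in phi.items()}


# Hypothetical game: 10 per member, plus a bonus of 6 when "a" and "b" cooperate.
def toy_game(S):
    return 10 * len(S) + (6 if {"a", "b"} <= S else 0)


print(sampled_shapley(["a", "b", "c"], toy_game))
```

The estimates for a and b land close to 13, and c gets exactly 10 because its contribution never changes. Within each sampled ordering the marginal contributions telescope to v(N) - v(∅), so the estimates always sum to 36 even though individual values are approximate.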

References

- Investopedia.com: Shapley Value Definition

- Wikipedia.org: Shapley Value Calculation

- arXiv.org: Lundberg & Lee, A Unified Approach to Interpreting Model Predictions (arXiv:1705.07874)

- SHAP Documentation

Authored by: Vishnu Prasath, Consultant at Absolutdata